Transcript: Harnessing Copilot and other gen-AI tools to increase digital accessibility efficiency

Contents

About this transcript

This is a transcript of the Harnessing Copilot and other gen-AI tools to increase digital accessibility efficiency presentation. To make the transcript more readable as a standalone resource I have added images and videos from the presentation within the transcript. Since I described the parts relevant to the presentation within the transcript there will be some duplication.

You may prefer to use the radio buttons below to hide the images and videos to save bandwidth or reduce potentially duplicate content.

Video overview

[Computer chime noise]

Audio Description: This introduction has an audio description. A cartoon of a robot representing Microsoft Copilot appears on screen. The robot waves to the audience.

Matthew Deeprose: Can generative AI tools such as Copilot help us make content more accessible? In this video I’ll cover four scenarios I’ve used this year.

Audio Description: The robot transforms captions into a transcript.

Matthew Deeprose: Taking a set of captions and turning it into a formatted transcript.

[Sparkly noise]

Audio Description: The robot transforms an image into a text.

Matthew Deeprose: Writing alternative text for images.

[Sparkly Noise]

Audio Description: Another robot labelled Whisper AI appears.

[Reverberating noise]

Together they transform a podcast into a transcript.

Matthew Deeprose: Transforming a podcast into a written transcript with speaker attribution and formatting.

[Sparkly noises]

Audio Description: The copilot robot creates text, images, tables and graphs.

Matthew Deeprose: And generating sample content for use in accessibility workshops.

[Squirrely electronic noise]

I’ll share how you can recreate these scenarios…

Audio Description: The background darkens. Articles about concerns around climate sustainability and bias appear above Copilot robot's head.

Matthew Deeprose: And touch upon some of the challenges and opportunities of using generative AI.

[Deflated robot noise]

Audio Description: The background clears. A laptop displaying the presentation webpage appears beside the Copilot robot.

[Robot appearence chime]

Matthew Deeprose: On my website matthewdeeprose.github.io you can find the presentation recording, a list of the prompts I share, example files I used in the presentation, and links to further resources.

Audio Description: The laptop is replaced on screen by a mobile phone displaying the transcript.

Matthew Deeprose: You will also find an enhanced transcript of the presentation with images and example videos added so that if you prefer to read the content, you can.

Audio Description: The screen clears. Audio transcription ends.

Matthew Deeprose: Let’s get started!

Welcome

Hi, I'm Matthew Deeprose from the University of Southampton. For those who don't see me, I'm a white male in my late 40s. I'm wearing glasses, a navy blue suit, with a cornflower-coloured shirt and matching pocket square. My pronouns are he/him.

Introduction

Can we harness Copilot and other generative AI tools to increase digital accessibility efficiency?

To open the theme of this presentation and put it in context, let's start with a tweet from @McgarDana posted in 2021. It reads, "Accessibility isn't more work, you were just cutting corners before. The work was incomplete". She is saying that a web page, document, or online service isn't ready until everyone can use it, regardless of any disability or impairment.

Context

But for us in universities, many of our colleagues are still unaware of what digital accessibility is. I'm sure you've heard responses like "no one ever told me about this before", or "I haven't got time for this" even from our most well-meaning colleagues.

And with statutory legislation such as the Public Sector Bodies (Websites and Mobile (Applications) Accessibility Regulations in the UK, the latest 2022 revision I have a screenshot of on screen. And other developments, like our Blackboard Universal Standards that set an expectation of meeting a minimum 70% accessibility score for online teaching materials. We have a growing expectation for staff across our institution to engage with accessibility.

For many this will be "more work" than they might have anticipated previously, and extra time and resource will not necessarily be allotted for this.

Workload is a significant matter for universities, our university is running a workload principles project which aims to improve the way in which workload is managed, which will hopefully lead to improved wellbeing and engagement of the University community.

Harnessing AI: The New University Digital Strategy

Concurrently, our new university digital strategy has a vision to harness AI in ways that are ethical and helpful... So, the question is, can we use AI to reduce effort or workload, or otherwise increase efficiency with any of the digital accessibility workflows we might expect our colleagues to complete?

Why Copilot?

Today, while I will mention other tools, I'll mainly focus on Microsoft Copilot. If your institution is a Google shop rather than Microsoft, I expect the Google Claude experience will be similar.

-

Copilot uses a customised version of GPT-4 from Open AI.

-

As a Microsoft institution, we can log into it with our University account. This gives us a level of protection where our data isn't saved and won't be used to train AI models.

-

Microsoft can't view the chat data either. Usage isn't linked to individuals, and our university's identity is removed before the data is sent.

-

Using our institutional accounts, we have thirty responses a day we can use, compared with the standard ten.

Using Copilot

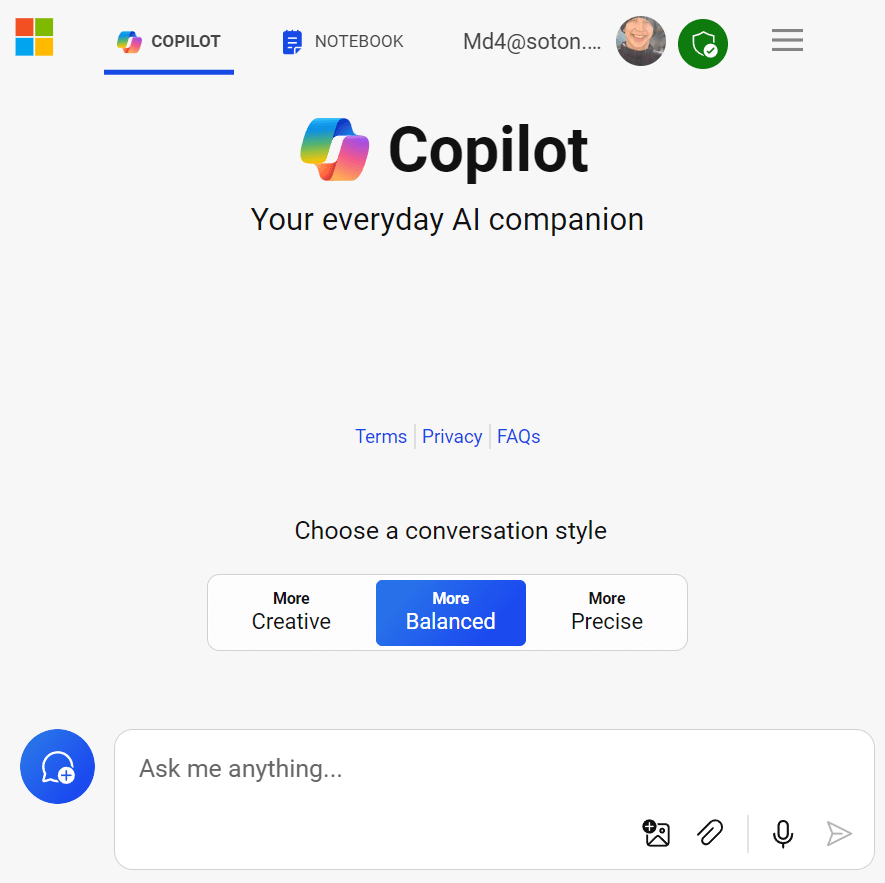

On the website copilot.microsoft.com there are two main interfaces for Copilot. Both can create text and images, but there are some differences between them.

Copilot "Default"

The default, which seems just to be named Copilot, is aimed at short questions and answers. As such there is an 8000-character limit for the prompts you can write. Microsoft's guides say 4000, but the web page is set to accept 8000.

My main reason to use this variant is that we can upload both images or documents (up to 1 MB in size) as part of our prompts. It accepts many types of document format, from standard office document formats to programming and scripting languages, markup content, LaTeX content and so on.

There's also the option to change conversation style, to switch from diverse and creative to deterministic and focussed. This is similar to the temperature parameter you may be familiar with in ChatGPT.

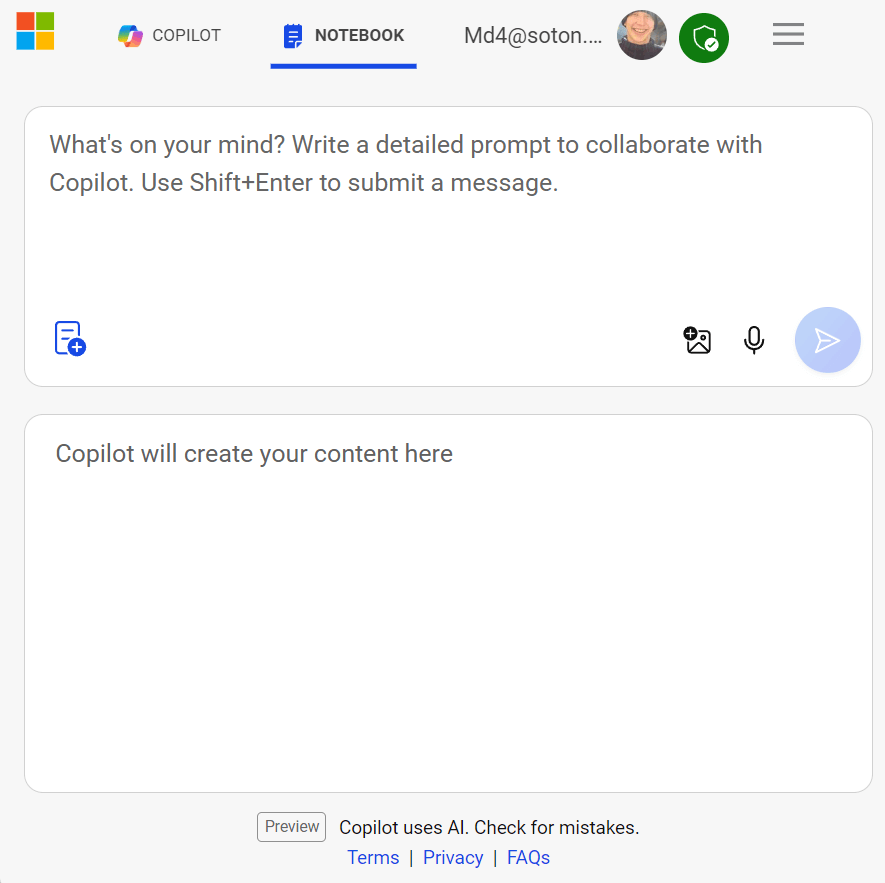

Copilot Notebook

Copilot Notebook is the variant I'll be focussing on. Apparently, it's designed for rewriting and iterating on prompts that you create.

More importantly for my use-cases it has an 18,000-character limit for the prompts you can enter and can return answers of between 9 and 10,000 characters.

One limitation is that you can only attach images, although there is a larger file size limit of 50 MB.

So, having set out the context, it's time to review the four scenarios for which I've found copilot to be helpful. The first is creating a transcript from corrected video captions.

Scenario 1: Creating a transcript from captions

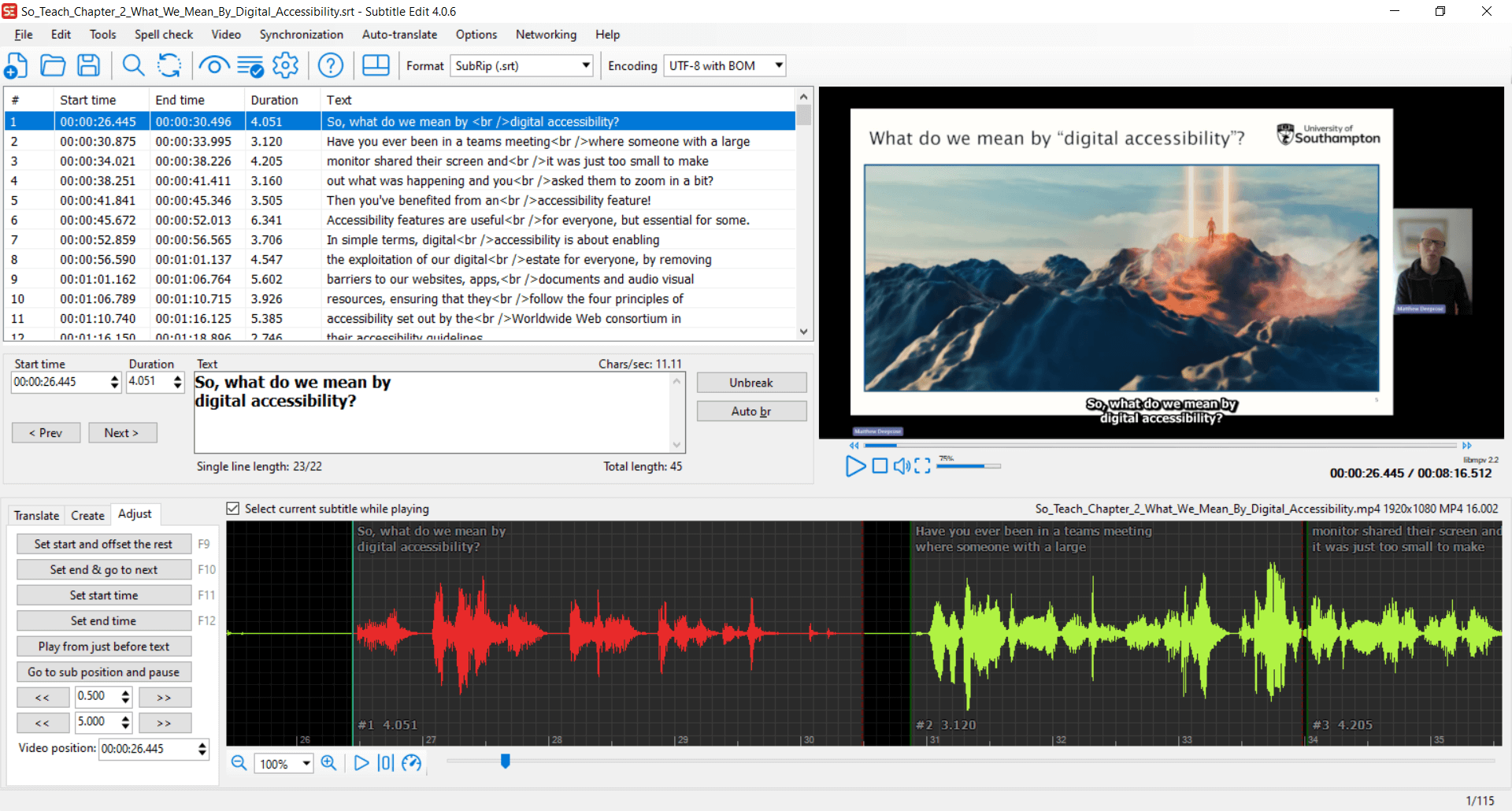

This story began back in January this year. My colleague Dr. Zubair Shafiq and I were creating an accessibility video for our doctoral college. We had broken the content into ten-minute chunks and were working on each separately, which we then pulled together in a final edit. We had been using a brilliant tool called SubtitleEdit to add, format, and time the captions.

Having completed the captions, we wanted to create a transcript.

Benefits of Transcripts

Transcripts have many benefits:

-

Not everyone wants to or can watch a video. A transcript allows more options to engage with your content.

This feedback from a member of our Neurodivergent Staff Group explains this well:

The thing that I find most annoying is the way online training is often set up. I don't learn well with videos, flashy moving images on screens, or endless things to click on to flip or open or whatever. It can get disorientating, and it makes it harder to take in the information because of the demands of interacting with the screen. My attention goes on interacting with the screen rather than taking in the information.

I'd rather just be given the information transcript-style so I can just read it (with images where they'll add something useful). I connect information into a whole better when I can see it all in one go, jump back and forth to double check things, and read it/process it at the speed I need to.

Feedback from a member of University of Southampton's Neurodivergent Staff Group

-

If the transcript is suitable for publishing online, it may help to improve Search Engine Optimisation, bringing a greater audience to your content.

-

Having a transcript can help you to more easily create further resources like blog posts, articles, and so on from that transcript source, depending on the context and purpose of your original content.

-

From an accessibility point of view, as well as being a benefit, depending on the level of compliance we are aiming for, we are required to provide equivalent alternatives to media content, such as a well-prepared transcript.

Using Subtitle Edit

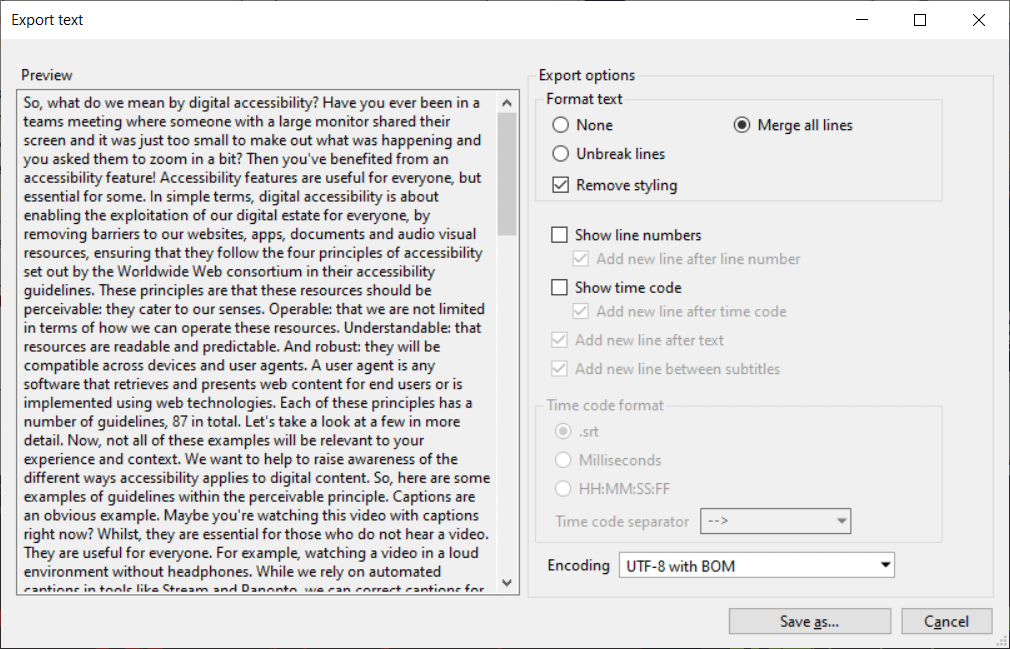

Going back to my scenario, Subtitle Edit has an export text feature to turn the captions into a single block of text.

That block of text is not very readable. While I have the punctuation that I had added when creating the captions, there are no paragraphs.

Editing that text to set it into paragraphs is a time-consuming and boring task. On screen, I have a recording of me going through that text and tidying it into sensible paragraphs. It's a slow process. It would be even slower if I had not written the original text myself.

Using Copilot for Transcript Formatting

Could Copilot make this faster?

I wrote a prompt asking it to reformat my transcript to be more readable. I specified that it could add headings, set paragraph breaks, and use bullet points where appropriate. In my initial testing, I found that it rewrote the text of my transcript, so I added further information explaining that the words and the order in which they appear should remain unchanged.

Instructions:

Please format the following transcript to be more readable.

Use headings, paragraph breaks, and bullet points as appropriate.

Important: because this is a transcript, it is essential that the text itself is kept verbatim. Please make sure you do not change any of the original words or the order in which they are presented.

Transcript:

...

On screen, I'm sharing a recording of Copilot notebook running that prompt. It is reformatting the transcript as I asked, adding paragraph breaks, headings, and using lists where appropriate. Of course, I had to check the results, and I changed them to suit my preference, but it saved me a lot of time and boredom.

Final Result

The result is on screen. I'm showing a Word document version of the transcript, with the navigation pane open to show that I have set the headings using heading styles so they will be usable by assistive technologies.

Tips When Making Transcripts

A few tips from my experience of making transcripts:

- If you are the creator of the content, in your original video aim to describe what is happening on screen as much as possible. This should help you to avoid the requirement of a separate audio description of any unvoiced but important action. It should also make your transcript more meaningful to those who are reading it.

- For example, rather than saying "as you can see, the graph shows we have a significant number of students who disclose a disability" you could say instead "over the past five years around one fifth of our students have disclosed a disability each academic year".

- Transcripts can be more useful if you add hyperlinks to other websites or documents that you reference in your original media.

- While not a requirement, if you add relevant images, with appropriate alternative text, the transcript will become even more useful especially for those who just prefer to engage with content in written form over video.

Scenario 2: Writing alternative text

Next, can generative AI help us to write alternative text?

What is Alternative Text?

You may hear alternative text shortened to "alt text". I'm likely to use these terms interchangeably. To me, they mean the same thing. It's the written description of an image that we write for those who do not see it, helping them to get the intended meaning of that image. This might be those with visual impairments who use a screen reader, an assistive technology that speaks aloud what is on screen.

Benefits of Alternative Text

Alternative text benefits those who personalise their learning experience using alternative formats from Blackboard, such as the audio version. Those who listen to content rely on alternative text being provided for images; otherwise, they'll miss out on important details.

Alt text also appears when an image link is broken, or users have turned off images.

Those on pay-as-you-go internet may disable images on web pages to save bandwidth or data costs. If alternative text has been added, they won't miss out. A report last year from JISC found that around a third (34%) of UK students had problems with mobile data costs.

When we don't add alternative text to images, we're creating a barrier for those who don't see them.

Writing Effective Alt Text

When we write alt text, we should consider:

-

the context in which we use the image,

-

our intended audience - who is our target reader or listener,

-

and what is the function or purpose of that image?

We may write different alternative text for the same image when we use it in different contexts, for different audiences, and depending on the purpose of the image.

How much to write?

While the general recommended length for alternative text is about two sentences, when an image is more complex like a chart or graph, we may need to write a longer description of the image.

I use this guide to explain when we may need to write a long description for an image.

- If we're just using the image for visual interest, we'll mark it as decorative.

- If the image replicates text already in the document, then we should add a caption describing the essential content and context and use the alt text feature to mark the image as decorative.

- If the image supports or rationalizes what is in the text, then I should write a brief alt text describing essentials of the image and use a caption or title.

- If the image goes further than what is in the text, or I want students to use the graph in some way, then I should write a brief alternative text describing the essentials, add a caption, and then provide a full description elsewhere in the document.

- Ideally, I should also provide any tabular data that was used to generate the graph or chart if my image was one of those.

Using AI Tools for Alt Text

Our colleague Dr. Tharindu Liyanagunawardena, Digital Accessibility Officer at University of Reading, shared with me a few months ago this Image Accessibility Creator tool from Arizona State University (ASU).

This tool allows you to upload an image file. It will use the GPT-4o model provided by OpenAI to create a long description, a short alternative text, and it will list any words it can detect in the image. You can also provide further information about the image to help it write a better description. With this detail, we may have all the information we need to create a suitable alt text based on our earlier considerations.

Demonstration of Image Accessibility Creator from ASU

On screen, I'm demonstrating how, having uploaded a cartoon image of a cat sleeping on the dashboard or cockpit of a spaceship, it describes the image impressively, sharing all the details, colours, and mood of the image.

Because ASU is using OpenAI's application programming interface (API), images are not kept or used by OpenAI.

The ASU tool is really impressive, and it's currently open to anyone to use, but since it may be put behind a login at any time, I worked on a prompt to get Copilot to give just as good an answer.

Copilot Prompt for Alt Text

The prompt I have is based on one that Purdue University shared and I've fine-tuned a little. I'll read out the prompt:

"You are a university-level instructor working to ensure images in your online course materials conform to Web Content Accessibility Guidelines at Level AA. You need to accommodate for disabilities and impairments such as blindness, low vision, and colour vision deficiency to ensure equitable access to module content for all students.

You will be provided with an image. Please return a succinct title, alternative text (alt text), and a long description. You will also separately list verbatim all words that appear in the image.

The alt text should be no more than two sentences and should briefly describe the meaning conveyed by the image. The detailed description may be one or more paragraphs and must describe the image in great detail so that anyone who does not see it can gain a full understanding of everything in the image. Please format the detailed description such that it will be easily scannable. Use headings, paragraph breaks, and lists as appropriate."

I also recently added:

Use active voice. Use UK English

... because I noticed it would often write in passive voice. Active voice aids understanding. By default, Copilot writes in US English, so I want it to write in UK English.

This is just a generic prompt; we could tailor it for each image, based on the context, function, and audience for that image. While this gives slightly better results in terms of formatting, the ASU tool is a lot faster.

Reviewing Copilot's Image Descriptions

Let's review the strengths and weaknesses of Copilot's image description with three example images.

Example 1: Line Graph

Read long description of image "Example 1".

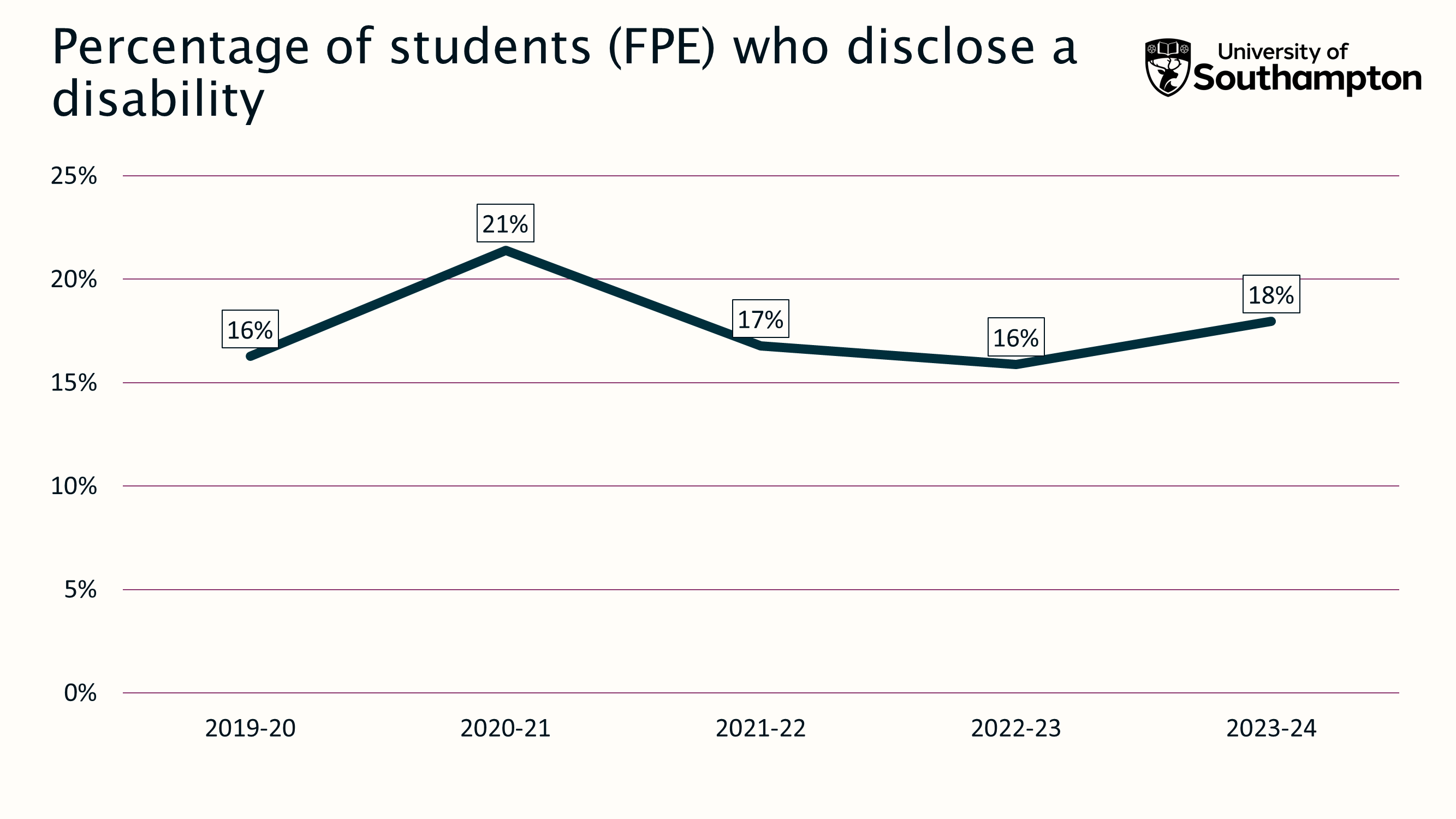

On screen, a line graph shows the percentage of "full person equivalent" students disclosing a disability at the University of Southampton from 2019/20 to 2023/24. Between one fifth and one sixth of students disclosed a disability during this period.

Read Copilot's description of Example 1

Student Disability Disclosure Rates at the University of Southampton.

Line graph showing the percentage of full-time equivalent students who disclose a disability at the University of Southampton from 2019 to 2024, with a slight increase over time.

The image is a line graph titled "Student Disability Disclosure Rates at the University of Southampton”. It represents the percentage of full-time equivalent (FPE) students who disclose a disability at the University of Southampton over five academic years, from 2019-20 to 2023-24.

The horizontal axis (X-axis) indicates academic years, starting from 2019-20 and ending at 2023-24.

The vertical axis (Y-axis) shows percentages, beginning at 0% and increasing in increments of 5% up to 25%.

For each academic year, there is a data point connected by lines indicating the trend:

- In 2019-20, approximately 16% of students disclosed a disability.

- In 2020-21, this increased to around 21%.

- A slight decrease occurred in 2021-22, with about 17%.

- The following year, 2022-23, showed another decrease to approximately 16%.

- An upward trend is projected for 2023-24, with an estimate nearing 18%.

Copilot gave a pretty accurate title of "Student Disability Disclosure Rates at the University of Southampton."

I did have two issues with the alternative text.

-

It correctly identifies it as a line graph, but it says it is about Full Time Equivalent students rather than Full Person Equivalent,

-

and while it says that the amount is increasing over time, it would be better to give us an idea of the proportion of students.

The long description is broken into sections for a general description, details of the graph, and an examination of the data points and trend.

-

It makes the same error around full time equivalent rather than full person equivalent students.

-

It describes the axis of the graph in detail, which for this graph I don't think is really necessary in the context for which I was using the graph, but that's still for me to judge.

-

And for the description of the data points it adds words like "approximately" and "around" the figures, which may not be necessary.

Still, I think it's a good start, which could be quickly reviewed for accuracy by the author who will use this graph. My own alt text would be very similar:

"Line graph showing percentage of 'full person equivalent' students who disclose a disability at University of Southampton. Between 2019 and 2023 the proportion remains between one fifth and one sixth of students."

For the long description, I set out the result for each academic year as an unordered list. This semantic detail will make it easier to anticipate and review the description for someone using a screen reader. It would also be easier to visually scan for readers as well. So, for example, I have:

- "Academic year 2019-20, 16%".

Suggested alt text by Ally's AI Alt Text Assistant

Last week, a new feature, the AI Alt Text Assistant, was launched for Ally. I've turned it on in our testing environment. For the same graph, it gives the following suggested alternative text:

"A graph with numbers and a line".

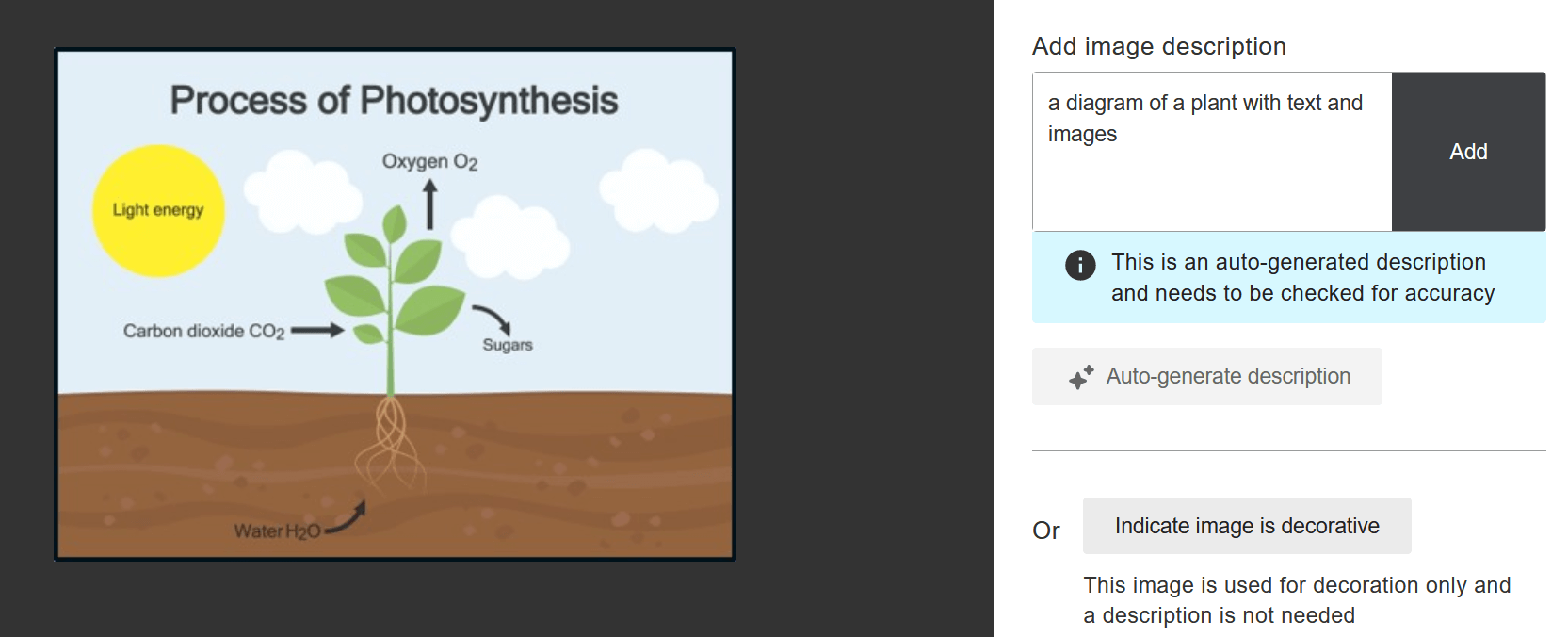

Example 2: Photosynthesis Diagram

Read long description of image "Example 2".

Read Copilot's description of Example 2

Photosynthesis Process Diagram.

A diagram illustrating the process of photosynthesis, showing how a plant takes in light energy, carbon dioxide, and water to produce oxygen and sugars.

This image is a simple, educational diagram that explains the process of photosynthesis. It uses visual elements like arrows and labels to depict how plants convert light energy, carbon dioxide (CO2), and water into oxygen (O2) and sugars.

The background consists of a brown ground at the bottom and a blue sky with white clouds above. In the center, there’s a green plant with roots embedded in the ground and leaves reaching towards the sky.

- A yellow sun on the top left corner is labelled "Light energy."

- An arrow points from the sun to the green plant indicating that light energy is absorbed by the plant.

- On the left side, an arrow labelled "Carbon dioxide CO2" points towards the plant.

- The roots are labelled "Water H2O," indicating absorption from the soil.

- On top right corner an arrow pointing away from plant is labelled "Oxygen O2."

- Another arrow pointing away from plant on right side indicates "Sugars," showcasing products of photosynthesis.

This detailed layout ensures that students understand not only what photosynthesis entails but also visually interpret how each element contributes to this natural process.

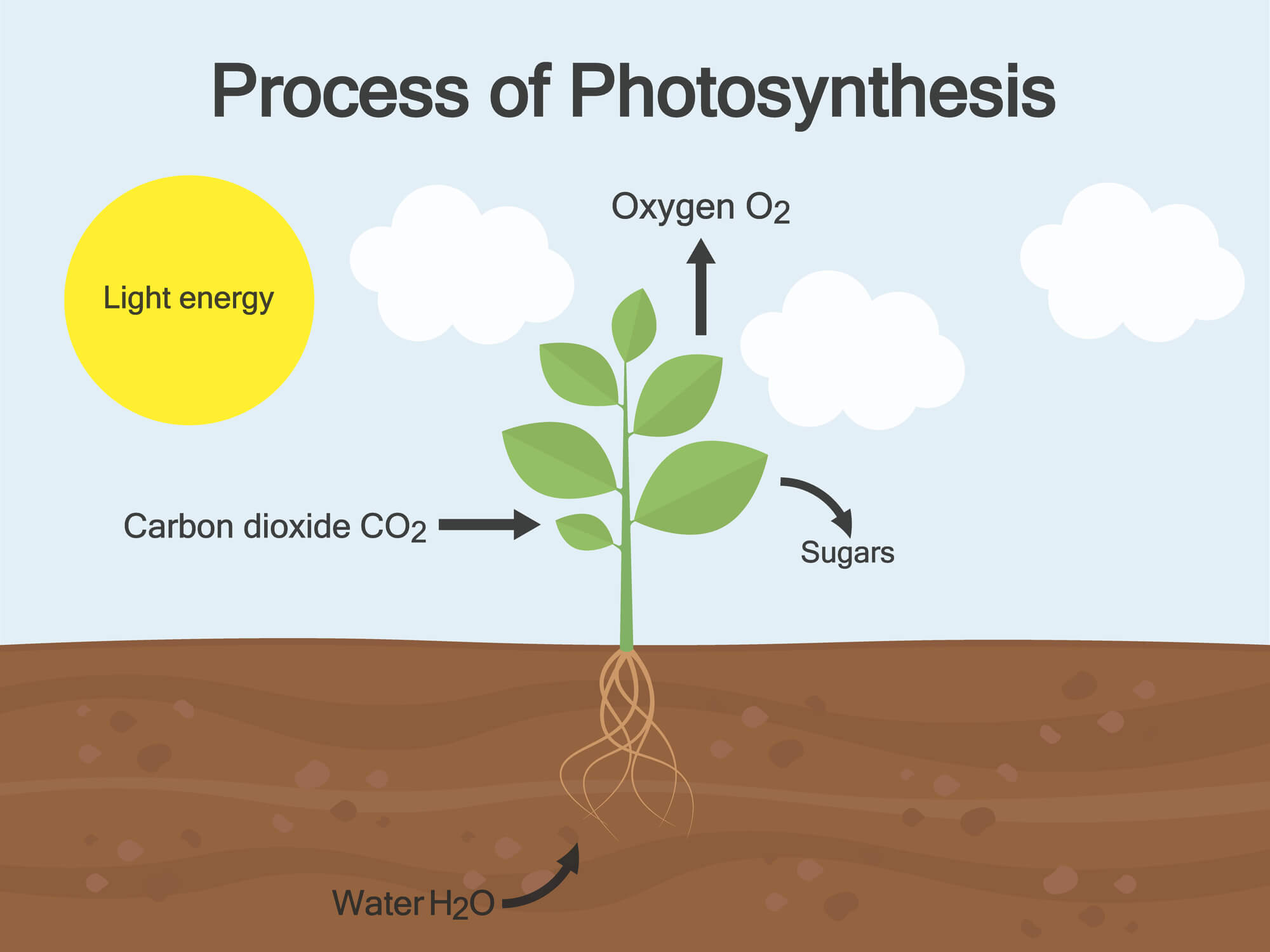

My next example is a basic diagram of the process of photosynthesis aimed at school children. Copilot's title is fine: "Photosynthesis Process Diagram".

The alt text is also pretty good: "A diagram illustrating the process of photosynthesis, showing how a plant takes in light energy, carbon dioxide, and water to produce oxygen and sugars."

However, when I show you my own description, I will highlight how I could make it a little better.

For the detailed description, Copilot produced an overview, a section describing the visual elements, and a section covering annotations and labels. I won't read out the whole description, which you can find in the slide deck, but I will point out a few aspects of the description to examine a little further. The description of the ground being brown, the sky being blue, and so on aren't necessary for the purposes of this description. In the way it is explaining the annotations and labels, for example, "The roots are labelled 'Water H2O', indicating absorption from the soil." could be described in a simpler way, as I'll cover in my own description.

While my alt text is three sentences, I would probably keep this as alt text and not write a longer description. My description is:

"Process of Photosynthesis. The plant takes in water through its roots, carbon dioxide and light energy through its leaves. The leaves release oxygen into the air and generate sugars as part of the process."

In this way, I believe I've covered the essential information in this diagram, without getting into the nitty gritty of describing labels and arrows, or colours.

Suggested alt text by Ally's AI Alt Text Assistant

Ally's AI Alt Text Assistant gives the following suggested alternative text:

"A diagram of a plant with text and images".

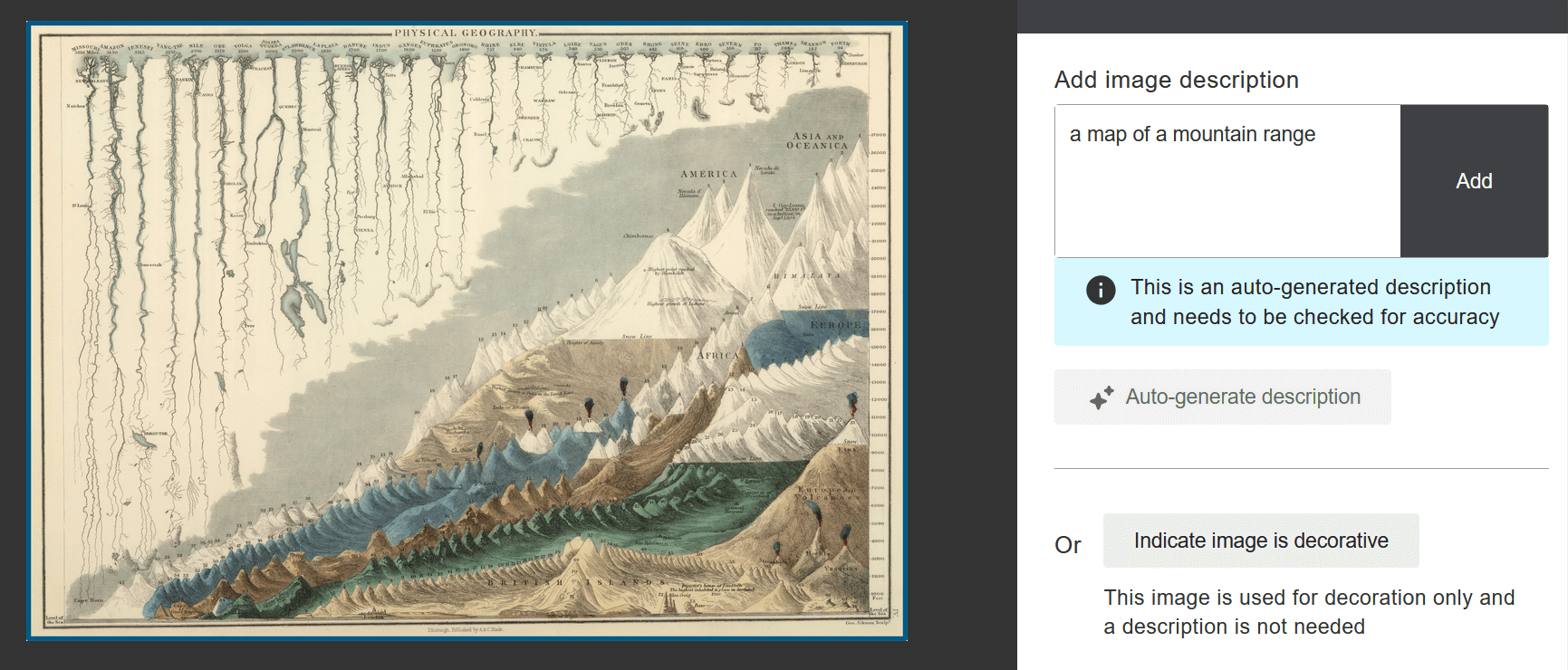

Example 3: 19th Century Physical Geography Map

Read long description of image "Example 3".

Read Copilot's description of Example 3

19th Century Physical Geography Map.

This is a 19th-century physical geography map, providing a cross-section view of various geographical features, including mountain ranges, plateaus, and rivers. The image includes labels for different regions and landforms.

The image is a detailed coloured illustration of a physical geography map from the 19th century. It provides a cross-section view of various geographical features, including mountain ranges, plateaus, and rivers.

The top section of the image displays numerous vertical lines representing rainfall measurements across different regions. Each line has varying lengths indicating the amount of rainfall.

This section showcases a range of mountains with their peaks labelled with names and elevations. To the left, there are blue shaded areas depicting bodies of water or rivers flowing between mountain ranges. In the centre, there is an elevated plateau labelled "Plateau of Thibet" with significant height compared to surrounding areas. To the right side, there are several high peaks including "Dhawalagiri" and "Mount Everest," with Mount Everest being the tallest among them.

The bottom right corner illustrates an erupting volcano labelled as "Active Volcano." Adjacent to this is a brown area representing lower elevation or plains leading up to another mountain range.

This map serves as an educational tool to understand historical perspectives on geography and how physical features were visualized in past centuries. It’s relevant for its depiction of natural phenomena like rainfall patterns and topographical diversity across different landscapes.

The final example is one suggested by my colleague Pete Boorman.

It's a chart comparing lengths of selected rivers and heights of selected mountains, from "General Atlas Of The World", published in 1854.

Along the top of the image are depictions of rivers like the Yangtze, Volga, and Ganges with their lengths listed in miles. Towards the bottom are hills and mountains from different regions and their height measured in feet.

Copilot suggests a good title, 19th Century Physical Geography Map.

The alt text, describing it as, "a 19th-century physical geography map, providing a cross-section view of various geographical features, including mountain ranges, plateaus, and rivers", is pretty good too.

Copilot broke down the long description into parts which I'm sharing across two slides. I won't read out everything on the page, but you can refer to my slide deck for this detail.

The structure of the long description is an overview, followed by descriptions of the top, middle and bottom sections of the image, And a commentary on the relevance of the image.

A big mistake is at the top section. It describes it as representing rainfall measurements, rather than rivers and their lengths.

The long description continues with an overview of the different sections, while The general description is ok, it gives specifics that are not actually in the image, like mentioning Mount Everest and the Plateau of Thibet.

So, it looks as though Copilot is confabulating these details. It has generated plausible-sounding but potentially inaccurate or fabricated information.

Suggested alt text by Ally's AI Alt Text Assistant

What did Ally's AI Alt Text Assistant suggest? "A map of a mountain range".

Reflecting upon Copilot's usefulness for alternative text

So, what lessons and tips can we draw from this? The images I used were all troublesome in one way or another. In my experience so far, images like photos or pictures, like the illustration of a cat sleeping in a space ship that we saw earlier work better, but I wanted to use images of the type we are more likely to use in Higher Education.

-

I think that Copilot and similar tools can help us to go onto write better short and long descriptions, by presenting the structure of the long description that we can then improve. Or by adjusting a description to make it relevant to our context, audience and purpose.

-

The generic prompt is just a start, it could be fine-tuned or we could ask follow up questions.

-

Of course, we need to consider bias and confabulation - particularly if the description infers meaning or content that is not in the image.

-

I can see that using AI to create descriptions may frustrate colleagues into just doing it manually as they get a better understanding of what makes good alt text.

-

As ever, subject matter experts should be writing or reviewing the descriptions, however they have been initially created.

Learning to write image descriptions

There's lots of resources out there to help you to write image descriptions.

I particularly recommend:

Described

TextBox digital's "described" service provides guidance and worked examples of writing alternative text for different types of image.

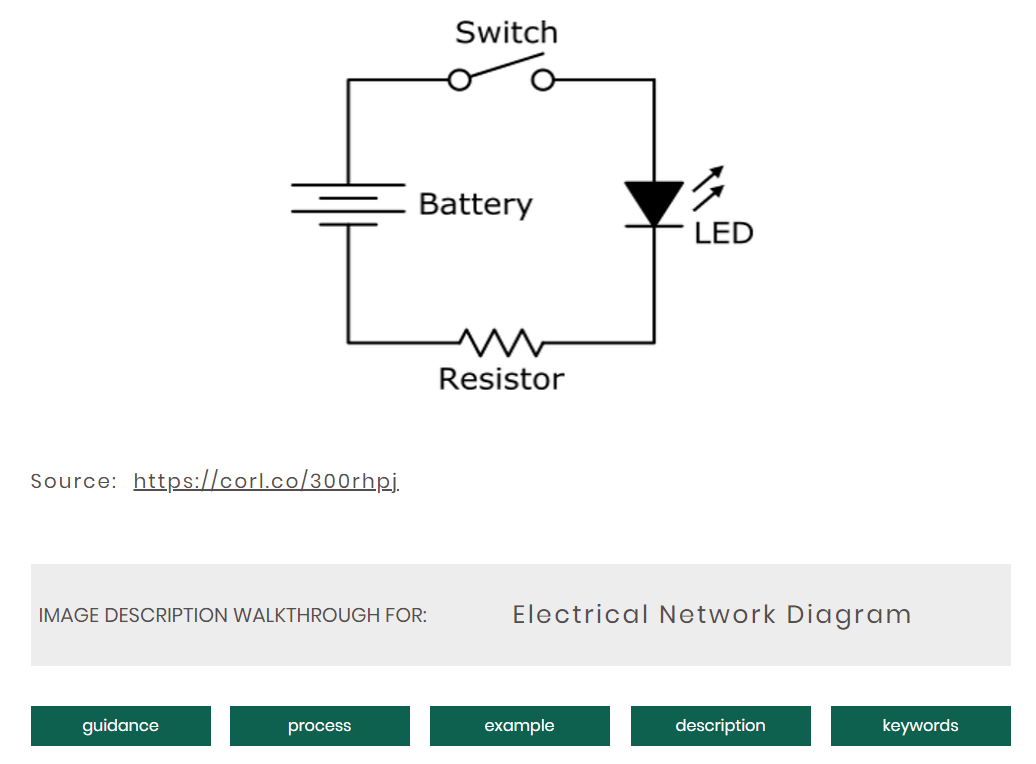

On screen, I'm listing the types of image they cover, examples include Diagrams, graphs, maps, and scientific images such as circuit diagrams.

I'm now sharing a list of examples from the scientific section. This includes astrophotography, chemical element notation and other scientific image types.

When you select an image type, Described provides an overall set of guidance, a step by step process, and a worked example with final complete description. Once you have a process you can follow it really helps you to unlock what once might have been a mystery and can make it seem simple. On screen, I have a screenshot of part of the explanation for describing circuit diagrams.

Scenario 3: Podcast transcription

In our third scenario we're going back to transcription, but from a different angle. This one is a bit more of a work in progress.

The Meliora Podcast from the Sustainability and Resilience Institute at the University of Southampton examines topics surrounding sustainability.

On screen, the podcast is advertised in our internal university "staff matters" newsletter. However, one of our colleagues in our communications team who writes the newsletter is profoundly deaf and cannot listen to the podcast. She and the podcast host, Professor Simon Kemp, got in touch with me to ask what options were available to make transcripts for the podcast. Simon has a small fund for paying students as casual staff to edit the podcast but has no extra funding to pay someone to make a transcript.

Finding Whisper Desktop

Since the podcast is an MP3 file, what tools did we have already that could help make a transcript?

We use Microsoft Stream, but it can only create captions for video files, not audio files. Converting an MP3 to a video file would work but requires additional software.

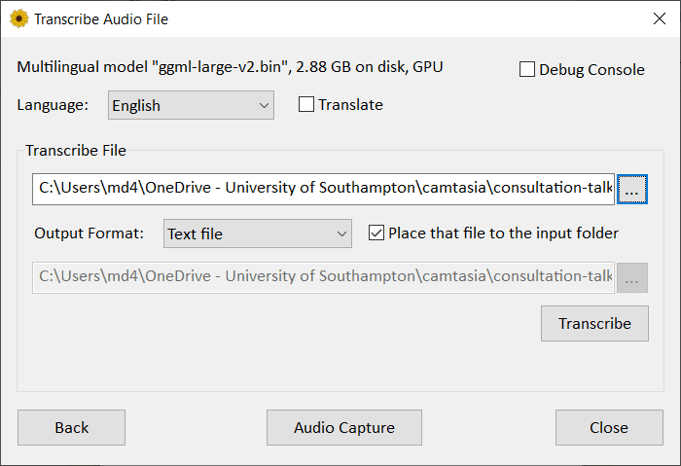

I came across Whisper Desktop. It's a Windows open-source application designed to run with the open-source automatic speech recognition system "Whisper" created by OpenAI. If you have a good graphics card, this tool can run on your Windows computer and transcribe audio or video files, or live speech captured through your microphone. It can output that transcription to caption files or plain text. It supports 99 languages and it can translate between languages.

Whisper AI Transcription Process

During transcription, you can view a live preview. On screen, I'm sharing the preview while it transcribed one of Simon's podcasts. Whisper processes the content in 30 second chunks. The words it detects appear within the preview. Each word has a colour going from green to yellow to red, indicating its confidence for accuracy. It took about 9 minutes to transcribe an hour-long podcast on my work computer. The transcription has a good level of accuracy. Unlike YouTube transcription, you do get punctuation.

As an aside, having confidence levels for the transcription might be useful for our Lecture capture recordings. If we could focus on words with low confidence levels, it might make caption correction faster.

Improving Readability

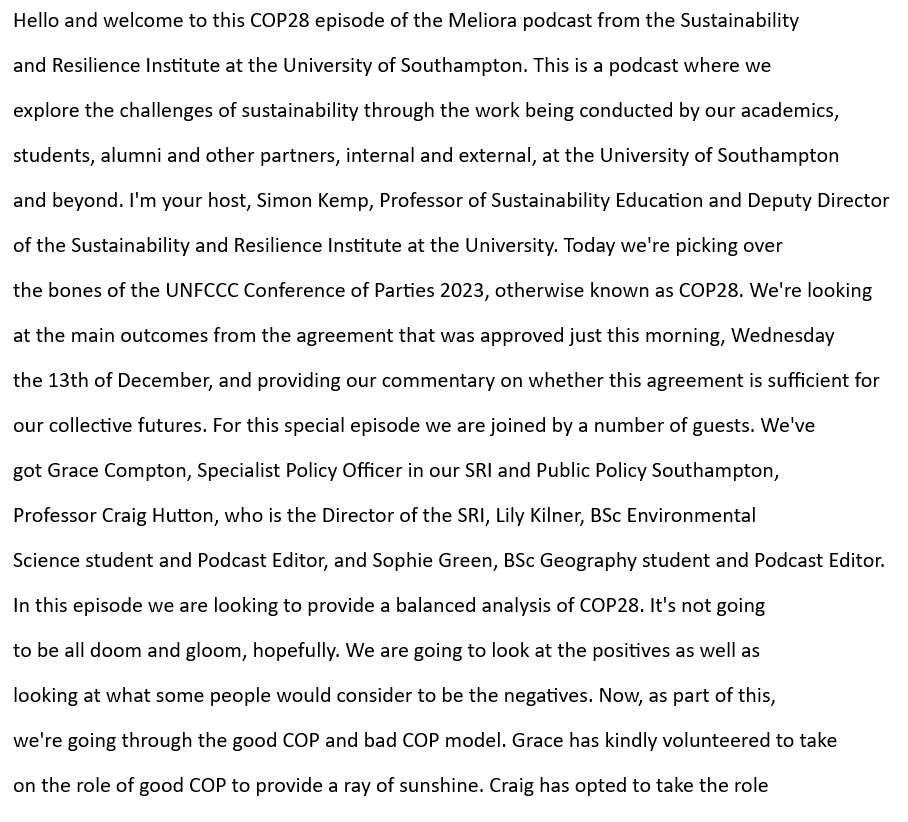

Just like in our first scenario, we have a big lump of unformatted plain text. Not very readable. In this podcast, there are five different speakers. Can we get attribution added so we know who is speaking?

Speaker Attribution

I wrote a prompt for Copilot. I gave the speakers' names and who is the first speaker. I asked it to write the name of the speaker followed by a colon in bold formatting to appear before each person speaks. I'm asking it to do this based on the text transcript, not the audio file.

Instructions:

I will paste a transcript from a podcast. The speakers' names are:

Simon Kemp

Grace Compton

Craig Hutton

Lily Kilner

Sophie GreenI would like you to add speaker attribution to the text I will paste, so we know who is talking. Do this by writing the name of the speaker followed by a colon in bold formatting when each speaker speaks. The first speaker is Simon Kemp.

Transcript:

...

On screen, I'm sharing a screen recording of Copilot going through that task. It is adding the name of each speaker in bold before they speak.

Formatting

It would be great if we could add the formatting detail like headings, paragraph breaks, and so on that we saw in our first scenario.

I have another screen recording showing copilot reformatting that transcript with the speaker attribution added, it's using headings, bulleted lists and so on. I did try asking Copilot to do speaker attribution and formatting with a single prompt, but I found the results to be much worse than doing them separately.

Before and After

Now I'm showing the before and after, our initial block of plain text, in comparison with a transcript that has headings, more meaningful paragraph breaks, bullet points, and speaker attribution. It's definitely not perfect, but it would take someone much less time to fine-tune and correct this transcript using our adjusted version, in comparison to our original block of text.

Future Prospects

So far, this is just a proof of concept.

In my testing, I had an 18,000-character limit for what I could ask Copilot, and it would not give me an answer longer than 10,000 characters. And the podcast transcript was 60,000 characters long!

I'm hoping to discuss with my colleagues if there is an opportunity for us to use the Copilot API to break these limits.

Ultimately, could we create an endpoint where a user could upload their MP3 file, and then have Whisper create the transcript and Copilot format it, rather than requiring them to do multiple separate steps?

I think the creation of an end-to-end solution like that could help because it is a good story to tell, from our profoundly deaf colleague being unable to listen to a university podcast, to an AI-based solution that can help to create a readable transcript with a much lower level of human effort to correct and adjust the final transcript.

Scenario 4: Creating Sample Content for Accessibility Remediation Training

The final scenario is around creating what I call "sample content".

I'm meeting teams around the university who want an introduction to digital accessibility. We have online training available, but meeting teams and discussing accessibility within the context of their work, with examples to work through, can be quite effective.

I've also found it's better not to use "real-world" content. If we don't approach this in the right way, colleagues might feel that their content is being singled out. But creating example content that is more meaningful than a chunk of " Lorem Ipsum placeholder text" can be a challenge.

Creating "sample content" with the help of Copilot

I'll be working with a group of project managers and analysts soon. I asked Copilot to create a project update document for a fictitious project that aims to make project managers more efficient by using cats. I stated that the document should have:

- An overview of the project's aims

- Red, green, amber ratings for time and cost

- A list of deliverables

- A roadmap

Please create a project update document for a fictious project that aims to make university project managers more efficient in delivery of project outcomes using cats.

The outline should include:

- an overview of the project aims.

- red/amber/green ratings for time and cost.

- a list of project deliverables.

- a project roadmap.

I could then adjust the content it gave me by adding some accessibility issues myself that the group could remediate while we covered the topics.

On screen, I'm browsing through my document. I've added defects like:

- Aspects of low contrast

- Red, amber, green rating using colour alone to express meaning

- A table without proper headings

- Poorly crafted links

- Lists without list semantics

The content itself is slightly facetious, but I've avoided using real-world content and Lorem Ipsum placeholder text. I've got elements that would appear in a project document, and it took 10 minutes altogether to create.

Generating an image, encountering bias?

At the end, I added an image that I asked Copilot to create of a project manager being assisted by a cat.

Copilot will always give a choice of four images, but on my original attempt, it gave me four white male project managers, perhaps an example of bias within the model.

So, I adjusted my prompt to specify the gender and ethnicity of the project manager.

Conclusion

Now I'm going to draw us to my conclusion.

Generative AI and climate sustainability

Of course, we must consider the sustainability of AI tools as we face a climate emergency that will have an unthinkable impact on our lives within the next 20 years.

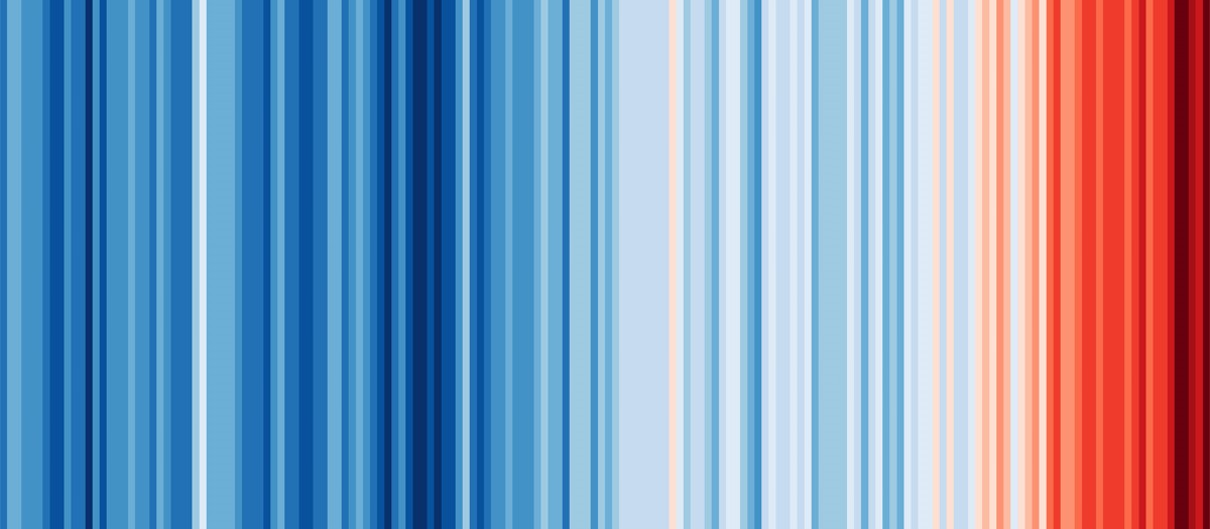

The "Climate Stripes" image by Professor Ed Hawkins shows global temperature rise over nearly two centuries. Each stripe represents a year's average temperature relative to the overall period. Blue stripes indicate cooler years, while red stripes show hotter years. The deep red stripes on the right highlight recent rapid warming.

A single generative AI exchange can consume the equivalent of half a litre of fresh water for data centre cooling and consume 100 times more energy than one Google search. Understandably, there is a great deal of concern around the impact of AI on the climate.

A post in Disconnect Blog, titled " Generative AI is a climate disaster," explains that Google and Microsoft's emissions are increasing between 30 and 50% despite their goals to be climate neutral by 2030.

Bias in Generative AI

Bias has long been a concern of generative AI. I have some recent news articles:

- The University of Washington found that Chat GPT was biased against resumes with credentials that implied the applicant had a disability.

- A recent paper from Cornell about the biases in generative AI.

- A recent blog post by Baldur Bjarnason comparing generative AI with asbestos. Baldur explains that just as asbestos use was rationalised as having positives that outweighed the negatives until it was banned in the US only this year, generative AI is in the same position, promising some positives and with advocates either ignoring the negatives or saying that the positives outweigh them.

Challenges and Opportunities

Now for some challenges and opportunities, these are more open questions for institutions to consider, than answers.

Reproducibility

Different answers from Copilot to the same question can occur due to model changes (e.g., switching from GPT-4 to GPT-3.5), adjustments to the model, or slight variations in punctuation or spacing in our initial question. Is reproducibility important? How do we explain this to users?

Dealing with constant change

Likewise, the tools are changing frequently. In the weeks between me starting work on this presentation and today, both Chat GPT 4.0 mini was released, as was Ally's AI Alt Text Assistant.

Maturity for specific workflows

The best scenarios involve manipulating text and using prompts. To mature these solutions, we might provide more "turn-key" options, possibly using local Large Language Models. Copying and pasting prompts into a browser is still immature and not necessarily user-friendly.

All of the scenarios I've looked at today are about reducing effort and time, but verification and completion by a subject matter expert is still required.

Anxiety and Ethics

There is rightfully a lot of anxiety around AI, particularly in terms of the ethics of how large language models are trained, their sustainability, and impact on the climate.

Can we account for our carbon usage of generative AI just as we do for university buildings and travel?

Opportunities to gain buy-in?

Finally, among all these challenges and concerns, I think that positive uses of AI to remove accessibility barriers is a good story to tell that may help to gain buy-in from university stakeholders, both toward digital accessibility and using AI to reduce the digital accessibility workload.

Your ideas

I hope that by sharing these simple ideas, we can continue the conversation and learn from each other about your ideas and uses for generative AI that help us in our digital accessibility work. I really want to learn what others in our community have been doing.

Links

At the end of the slide deck, which you can download from the community site, you'll find links to everything I referred to.

Thanks for listening.

Transcript ends.

Links from the final slide

- "Accessibility isn't more work, you were just cutting corners before. The work was incomplete".

- The Public Sector Bodies (Websites and Mobile Applications) Accessibility (Amendment) (EU Exit) Regulations 2022

- Blackboard Universal Standards 2024/25 (Internal link)

- Workload Principles Project (Internal link)

- Digital Strategy Plan - Draft (Internal link)

- Copilot

- Copilot Notebook

- Subtitle edit

- Arizona State University Image Accessibility Creator

- Described

- Complex images - making sense for accessibility

- NWEA Image descriptions for Assessments

- Meliora podcast

- Whisper Desktop

- My quick guide on setting up Whisper Desktop

- Generative AI is a climate disaster

- ChatGPT is biased against resumes with credentials that imply a disability - but it can improve

- Bias in Generative AI

- AI and Asbestos: the offset and trade-off models for large-scale risks are inherently harmful.

Long Descriptions of Images

Long Description of Example 1

Line graph showing percentage of 'full person equivalent' students who disclose a disability at University of Southampton in academic years starting between 2019 and 2023.

- Academic year 2019-20: 16%.

- Academic year 2020-21: 21%.

- Academic year 2021-22: 17%.

- Academic year 2022-23: 16%.

- Academic year 2023-24: 18%.

Long Description of Example 2

The alt text suffices for the long description, but for completeness I'm duplicating it here.

Process of Photosynthesis. The plant takes in water through its roots, carbon dioxide and light energy through its leaves. The leaves release oxygen into the air and generate sugars as part of the process.

Back to Example 2.Long Description of Example 3

The image is a detailed comparative chart from 1854 illustrating the lengths of several notable rivers and the heights of various mountains and hills worldwide. The chart is split into two sections. The upper section depicts rivers, and the lower section juxtaposes mountains and hills.

Rivers

The upper part features an array of rivers displayed from the top edge, each vertically extended downward. The rivers are labeled with their names and lengths at the top, with intricate branching patterns. The rivers include the Missouri-Mississippi, Amazon, Yenisei, Yang-tse-Kiang, Nile, Obi, and several others, each labeled with its name and length denoted in English miles. The rivers are drawn with thin, squiggly lines to represent their paths and tributaries, illustrating branching complexities.

Mountains

The bottom part highlights various mountain ranges and hills, grouped by their respective continents or regions. Mountains are illustrated as triangular peaks, with varying heights labeled along the right side, from 0 to 28,000 feet. Peaks labeled include famous ones like Chimborazo, Mont Blanc, and the Himalayas, with some depicted with snow caps to denote snow lines. The chart also includes hills and mountain ranges from different continents like Europe, America, Asia and Oceania, and Africa, each grouping indicated by textual labels. Topographical features are depicted with a combination of colors: green for lower elevations, transitioning to brown and grey for higher elevations, and white peaks where snow is present.

Back to Example 3.